Overview: Restricting hand movement affects the semantic processing of objects, a finding that supports the theory of embodied cognition.

Source: Osaka Metropolitan University

How do we understand words? Scientists still do not fully understand what happens when a word comes to mind. A research group led by Professor Shogo Makioka of the Graduate School of Sustainable Systems Science at Osaka Metropolitan University set out to test the idea of embodied cognition.

Because embodied cognition holds that people understand the words for objects through the way they interact with those objects, the researchers devised a test to observe how participants process the meaning of words when their ability to interact with objects is restricted.

Words are defined in relation to other words. For example, a "cup" might be described as "a glass container used for drinking." However, you can only actually use a cup if you understand that you drink from it by holding it in your hand and bringing it to your mouth, or that it will break if you drop it on the floor.

Without this understanding, it would be difficult to build a robot that can handle real cups. In artificial intelligence research, this challenge of mapping symbols onto the real world is known as the symbol grounding problem.

How do humans achieve symbol grounding? Cognitive psychology and cognitive science propose the concept of embodied cognition, in which objects acquire meaning through their interactions with the body and the environment.

To test embodied cognition, the researchers conducted an experiment to see how participants' brains responded to words when their hands were free to move compared with when their hands were restrained.

“Establishing a method for measuring and analyzing brain activity was extremely difficult, but we managed it,” explains Professor Makioka.

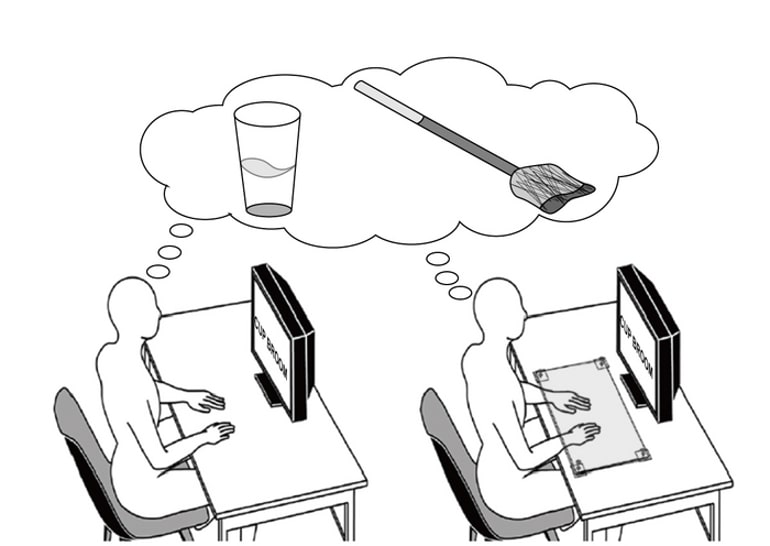

In the experiment, participants were shown two words on a screen, such as “cup” and “broom.” They were asked to compare the relative sizes of the objects the words represented and to say aloud which object was larger; in this case, the answer is “broom.”

The researchers compared words for two types of objects, hand-manipulable objects such as “cup” and “broom” and non-manipulable objects such as “building” and “lamppost,” to observe how each type was processed.

During testing, participants placed their hands on a desk, where the hands were either left free or restrained with a clear acrylic board. When the two words appeared on the screen, participants had to consider both objects, compare their sizes, and process the meaning of each word in order to say which one represented the larger object.

Brain activity was measured with functional near-infrared spectroscopy (fNIRS), which has the advantage of recording brain activity without imposing additional physical constraints on the participant.

The measurements focused on the left intraparietal sulcus and the left inferior parietal lobule (supramarginal and angular gyri), regions involved in tool-related semantic processing.

The speed of verbal responses was also measured to determine how quickly participants answered after the words appeared on the screen.

The results showed that hand restraint significantly reduced activity in the left hemisphere in response to hand-manipulable objects. Hand restraint also affected the verbal responses.

These results show that restricting hand movement affects the semantic processing of objects, supporting the idea of embodied cognition. They also suggest that embodied cognition may offer a useful approach for enabling artificial intelligence to learn the meaning of objects.

About this Cognitive Research News

Author: Yoshiko Tani

Source: Osaka Metropolitan University

Contact: Yoshiko Tani – Osaka Metropolitan University

Image: The image is credited to Makioka, Osaka Metropolitan University

Original research: open access.

“Hand Constraints Decrease Brain Activity and Affect Language Response Speed on Semantic Tasks” by Sae Onishi et al. Scientific Reports

Abstract

Hand Constraints Decrease Brain Activity and Affect Language Response Speed on Semantic Tasks

According to the theory of embodied cognition, semantic processing is closely tied to bodily movements. For example, restricting hand movement suppresses memory for objects that can be manipulated by hand. However, it has not been confirmed whether physical restraint reduces semantics-related brain activity.

We used functional near-infrared spectroscopy to measure the effects of hand constraint on semantic processing in the parietal lobe.

Pairs of words representing the names of objects that could be manipulated by hand (such as cups or pencils) or not (such as windmills or fountains) were presented, and participants were asked to identify which object was larger.

We analyzed reaction time (RT) and the activation of the left intraparietal sulcus (LIPS) and the left inferior parietal lobule (LIPL), including the supramarginal and angular gyri, during the size-judgment task. We found that hand constraint suppressed LIPS activity for hand-manipulable objects and affected RT in the size-judgment task.

These results indicate that body restraint reduces activity in brain regions involved in semantics. Hand constraint may inhibit motor simulation, which in turn may interfere with body-related semantic processing.