Kaiser Permanente is one of the largest employers in San Francisco, Alameda, and other Bay Area counties and has been an early adopter of AI. Company officials said they rigorously test the tools they use for safety, accuracy, and fairness.

In response to KQED’s request for comment, Kaiser Permanente said in a statement: “Our physicians and care teams are always at the center of decision-making with our patients. We believe that AI has the potential to help physicians and employees, and improve the member experience. As an organization dedicated to inclusivity and health equity, we ensure that the results from our AI tools are correct and unbiased. AI does not replace human evaluation.”

One of the programs used at 21 Kaiser hospitals in Northern California is Advance Alert Monitor, which analyzes electronic health data to notify nursing teams when a patient is at risk of serious deterioration. The company says the program saves about 500 lives a year.

But Gutierrez Vo said nurses have pointed out problems with the tool, including inaccurate alerts and failures to detect some patients whose health is rapidly deteriorating.

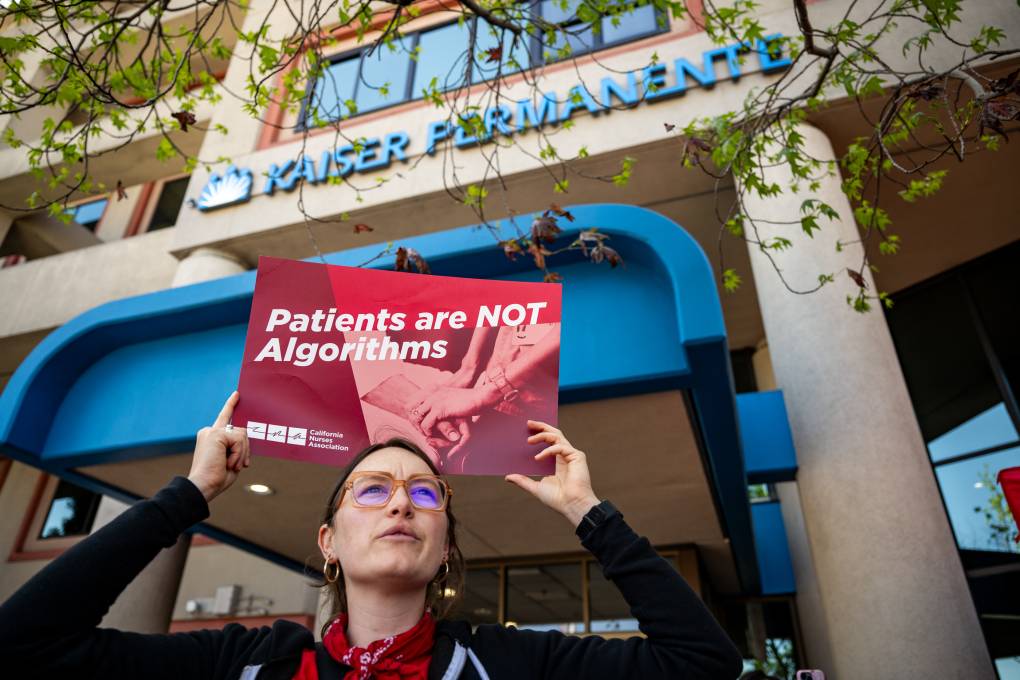

“There’s a lot of talk right now about this being the future of healthcare. These medical corporations are using this as a shortcut, as a way to handle patient load. And we’re saying, ‘No.’ We can’t do that without making sure these systems are safe,” said Gutierrez Vo, a nurse with 25 years of experience at the company’s Fremont Adult Family Medicine Clinic. “Our patients are not lab rats.”

The U.S. Food and Drug Administration has given pre-market approval to some AI-powered services, but most were approved without the comprehensive data required for new drugs. Last fall, President Joe Biden issued an executive order that includes a directive to develop policies for AI-enabled technologies in health services that promote the “well-being of patients and workers.”

“Technology is advancing at a very fast pace and everyone has a different level of understanding of what it can do, so it’s great to have an open discussion,” said Dr. Ashish Atreja, chief information and digital health officer at UC Davis Health. “Many health systems and institutions have guardrails in place, but they’re probably not shared as widely. That’s why there’s a knowledge gap.”

UC Davis Health is collaborating with other health systems to implement generative AI and other types of AI with what Atreja called “intentionality,” in order to support employees and improve patient care.

“We have a mission to ensure that no patient, clinician, researcher, or employee is left behind in reaping the benefits of the latest technology,” Atreja said.

Dr. Robert Pearl, a lecturer at Stanford Graduate School of Business and former CEO of the Permanente Medical Group (Kaiser Permanente), told KQED that he agreed with nurses’ concerns about the use of AI in the workplace.

“Generative AI is a threatening technology, but it’s also a positive technology. What’s best for the patient? That has to be the number one concern,” said Pearl, author of “ChatGPT, MD: How AI-Empowered Patients & Doctors Can Take Back Control of American Medicine,” a book he co-wrote with the AI system.

“I’m optimistic about what it can do for patients,” he said. “I often tell people that generative AI is like the iPhone. It’s not going away.”